‘Persuasion architectures’ are big business, and they’re surreptitiously targeting your kids any time they use a device, playing on their love of shiny things, as well as their naivete when it comes to veracity and safety on the internet, to make them click on the next ad or watch the next video. There is a myriad of sources available for online content, so let’s just stick to YouTube for now, to avoid getting bogged down in the terrifying amount of data that is being gleaned, stored and extrapolated about your daily life every second… and believe me, it is. Questions are being raised from a number of sources about exactly how much social media is ‘spying’ on you, with several journalists investigating whether or not Facebook (and, by extension, Instagram) is actually listening in on your phone to glean more information about what you like, do and know in order to target advertising to you more directly. But what we’re discussing here is the use of the same AI to target your kids (and its abuse by trolls to do the same), specifically on YouTube and YouTube Kids.

One thing I always tell my students is that ‘Computers are dumb.’ What I mean by this is that computers don’t reason and can’t be persuaded. They won’t make leaps of intuition or share your emotions on a topic. They simply understand parameters – if something fits the parameters, it is ‘True’. Beyond that, the machine knows nothing. And so it’s easy to use something like YouTube’s search algorithm, or its ‘Up Next’ playlist feature, to cause chaos online – the AI doesn’t know it’s serving up fake Peppa Pig videos to your kids, as all the tags and metadata for the episode are ‘True’ based on what you asked for. The disconnect happens when young children are unable to tell official content from ‘off-brand’ content made by trolls or internet denizens who find it funny to mess with your kids and show them creepy videos of Peppa being run over or visiting a sadistic dentist. It’s yet another reason to teach kids about veracity and checking their sources in this age of widespread misinformation and (maddeningly oxymoronic) ‘alternative facts’.
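To make the ‘dumb computer’ point concrete, here is a minimal Python sketch. It is not how YouTube’s systems actually work; the `Video` class, the `matches` function and the sample videos are all made up for illustration. It only shows that a tag check answers ‘True’ or ‘False’ and says nothing about who made a video or what is in it.

```python
from dataclasses import dataclass, field


@dataclass
class Video:
    # Hypothetical record: these fields are assumptions, not a real API.
    title: str
    uploader: str
    tags: set = field(default_factory=set)


def matches(video, wanted_tag):
    """Return True if the video carries the requested tag. Nothing else is checked."""
    return wanted_tag.lower() in {t.lower() for t in video.tags}


# Two invented videos with identical tags but very different origins.
official = Video("Peppa Pig episode", "official channel", {"peppapig", "kids"})
knock_off = Video("Peppa visits the dentist", "anonymous troll", {"peppapig", "kids"})

print(matches(official, "peppapig"))
print(matches(knock_off, "peppapig"))
```

Both checks come back the same, because the only question the code asks is whether the tag is present. The uploader and the actual content never enter into it.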

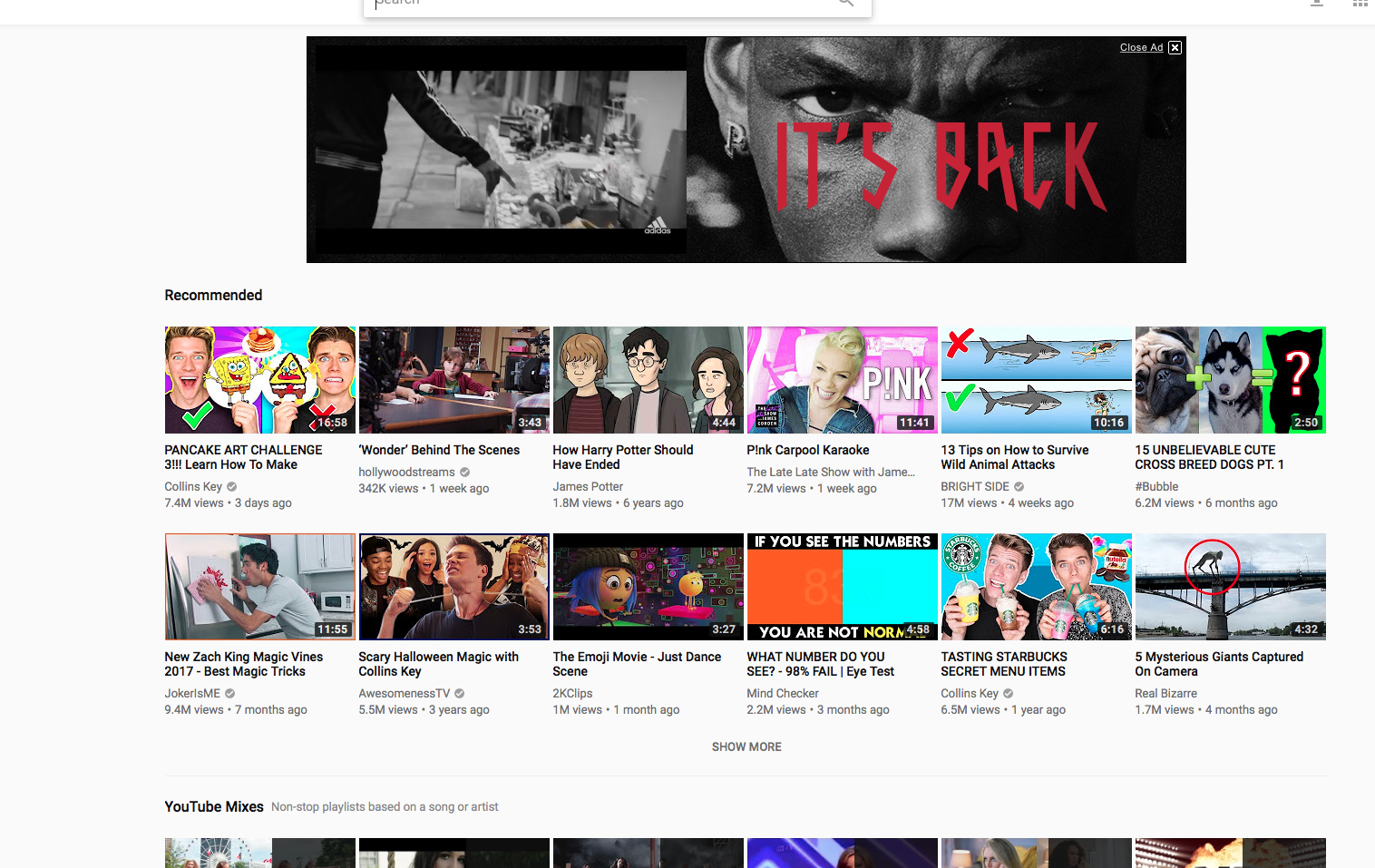

The problem is that most young kids can’t tell the difference between the ‘real deal’ Disney content and the knock-off troll fodder, so it feels fairly exploitative of the content creators to use beloved children’s characters in an attempt to garner views, or to show kids scary or offensive content for a laugh. More troubling still is the way these videos are injected into the rankings for search results and playlists using tags – the little bits of data you add to your videos (which include the title and the description as well as added metatags) to describe and explain them to the machines, precisely so that they can be found in searches. Let’s say I’m a troll, and I want my video of Peppa Pig to be seen by as many young kids as possible. One way to do this is to add tags which will link to my video from other places kids might go; I’d add #kindersurprise, #surpriseegg, #disney, #elsa, #pawpatrol, #unboxing, #fidgetspinner and any other tags I can think of that might pop my video into the rankings for a search of that kind. I’d pick an enticing thumbnail, but not one that had anything objectionable in it – I don’t want you (the parent) to notice it before it plays and remove it from the list. Once I start gaining views from kids who have either clicked on my prank video thinking it’s legit or just allowed the playlist to roll on through, I’ll start getting higher in the ranks. It doesn’t matter to YouTube what my content is; the dumb machine will only count my subscribers, ad watches and views, and start to pay me money for them. And that is often the crux of the whole cycle – video advertising revenue.
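The tag-stuffing trick can be sketched in a few lines of Python. This is a toy model under loud assumptions: YouTube’s real ranking is not public, and the `score` and `rank` functions, the scoring rule (tag overlap first, view count as tie-breaker) and the sample data are all invented here purely to illustrate the idea.

```python
def score(video, query_tags):
    """Toy ranking: number of overlapping tags, then view count as a tie-breaker."""
    overlap = len(query_tags & video["tags"])
    return (overlap, video["views"])


def rank(videos, query_tags):
    # Keep only videos sharing at least one tag with the search, best score first.
    hits = [v for v in videos if query_tags & v["tags"]]
    return sorted(hits, key=lambda v: score(v, query_tags), reverse=True)


# Invented catalogue: the troll video piles on tags from unrelated kids' searches.
videos = [
    {"title": "Official Peppa episode", "tags": {"peppapig"}, "views": 5000},
    {"title": "Troll Peppa video",
     "tags": {"peppapig", "surpriseegg", "elsa", "pawpatrol", "unboxing"},
     "views": 800},
    {"title": "Paw Patrol toy unboxing", "tags": {"pawpatrol", "unboxing"}, "views": 3000},
]

for query in ({"peppapig"}, {"pawpatrol", "unboxing"}, {"elsa"}):
    print(sorted(query), [v["title"] for v in rank(videos, query)])
```

In this toy model the troll’s video turns up in all three searches, including ones that have nothing to do with Peppa Pig, simply because of the extra tags. Nothing in the scoring looks at what the video shows, so once its view count overtakes the official episode’s, it takes the top spot for that search too.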

Basically, there are small teams of people out there creating this weird, dark, eclectic content with the sole purpose of exploiting both your kids’ inability to discern between good content and bad, and the automated search algorithms that serve the content. If you know what it takes to get your video to the top of the SEO rankings on YouTube, you’ll get views whether it’s decent or not. Some really clever people use 3D animation, as it’s easily recyclable, has a whole host of well-known character models available to use, and can be endlessly remixed and re-edited to fit SEO trends and current fads, because the algorithms don’t discriminate. And often, neither do your kids – it’s just the next video in a playlist of similar content.

So why is this such a major issue? Because of the nature of the victims and the insidious way they are being victimised and exploited for gain. While there is an argument to be made against violent video games and online pornography, neither of those media pretends to be something else or utilises algorithms and SEO to specifically target children, a more undiscerning and uneducated audience. Which segues nicely on to the next important point: by not managing this issue right now, Google and YouTube are complicit in this exploitation of your kids. Sure, they aren’t creating the content themselves, but the systems and architecture they have built and use to generate income are regularly being used to abuse children on a massive, global scale. Just as they have a responsibility to deal with hate speech and radicalisation on their platforms, they should be protecting the children who use them from abuse too.

While it’s easy to dismiss much of this content as trolling or mean-spirited pranks, the sheer amount of garbage and nonsensical content on YouTube points to something much darker and more pervasive – the systems we use on a daily basis, which were invented for information sharing and to sustain ourselves, are being used against us in a systematic and automated way to generate income for others. Beyond that, society at large is having problems discussing these issues (let alone recognising them) because the systems that we use every day and take for granted (yet often don’t understand) are being exploited by those with the know-how to do so.

So, how do you protect your kids from being exploited and served this garbage? Well, it’s easy to throw the baby out with the bathwater and say ‘Parent your kids yourself rather than sit them in front of YouTube’, but that isn’t practical for everyone. Besides, sometimes you really just need a break, and a playlist of prepped videos provides some quiet time and a bit of respite. So, here are a few tips on making YouTube safer for your young ’uns: